ARPANET’s success begat ALOHANET, SATNET, PRNET and other packet-switched networks. It was clear that ARPANET's network protocol would have to be revised in order to facilitate interconnection. In 1974, Vint Cerf and Bob Kahn published A Protocol for Packet Network Intercommunication. The design objective of the Internet was to enable interconnection between otherwise incompatible networks, promoting the research and innovation occurring at the edges, and leveraging the network effect. IP made interconnection easy and coordination unnecessary. According to Bob Kahn,

The idea of the Internet was that you would have multiple networks all under autonomous control. By putting this box in the middle, which we eventually called a gateway, it would allow for the federation of arbitrary numbers of networks without the need for any change made to any particular network. So if BBN had one network and AT&T had another, it would be possible to just plug the two together with a [gateway] box in the middle, and they wouldn't have to do anything to make that work other than to agree to let their networks be plugged in.[SEGALLER, NERDS 2.0.1: A BRIEF HISTORY OF THE INTERNET at 111]

The Internet reflected a culture where connectivity was paramount. With each interconnection of an additional network, the value of the Internet grew. [Carpenter, Architectural Principles of the Internet, IETF RFC 1958, Sec. 2.1 ("the goal is connectivity, the tool is the Internet Protocol, and the intelligence is end to end rather than hidden in the network. The current exponential growth of the network seems to show that connectivity is its own reward.")] [THE INTERNET'S COMING OF AGE, COMPUTER SCIENCE AND TELECOMMUNICATIONS BOARD, NATIONAL RESEARCH COUNCIL 35 (2001) ("the value placed on connectivity as its own reward favors gateways and interconnections over restrictions on connectivity")] [REALIZING THE INFORMATION FUTURE: THE INTERNET AND BEYOND, COMPUTER SCIENCE AND TELECOMMUNICATIONS BOARD, NATIONAL RESEARCH COUNCIL 3 (1994) (setting forth vision for Open Data Networks, stating in first principle that network should "permit[] universal connectivity.")] [David Clark, A Cloudy Crystal Ball: Visions of the Future, Presentation at the IETF, Slide 4 (July 1992) ("Our best success was not computing, but hooking people together").]

In 1985, the National Science Foundation concluded that the Internet was good, and sought to expand its reach to the greater academic community. Instead of providing the entire end-to-end network, NSF elected to supply a crucial piece: the first dedicated nationwide Internet backbone. The NSFNET interconnected with regional networks, and the regional networks interconnected with local networks. In so doing, NSFNET offered long-distance transit as well as traffic exchange between networks.

Commercial networks concluded that the Internet was good. Commercial traffic, however, could not be exchanged across NSFNET. Therefore, the early commercial networks established the Commercial Internet eXchange (CIX) and exchanged traffic on a settlement-free basis. For these early commercial networks, connectivity was paramount and growth of access services was king. [Brock, Economics of Interconnection at ii ("Commercial Internet service providers agreed that interchange of traffic among them was of mutual benefit and that each should accept traffic from the other without settlements payments or interconnection charges. The CIX members therefore agreed to exchange traffic on a "sender keep all" basis in which each provider charges it own customers for originating traffic and agrees to terminate traffic for other providers without charge.").]

The US Government concluded that the Internet was good, and sought to make it available to all. NSF was tasked with privatizing the Internet. In order to be successful, NSF had to establish a means for emerging commercial networks to exchange traffic. Following the CIX model, NSF built four Internet exchange points, known as NAPs, in Washington, D.C., New York, Chicago, and San Francisco. [National Science Foundation Solicitation 93-52, Solicitation for Network Access Point Manager, Routing Arbiter, Regional Network Providers, and Very High Speed Backbone Network Services Provider for NSFNET and NREN Program (May 6, 1993) (setting forth NSF's plan for privatizing NSFNET).] [GREENSTEIN, HOW THE INTERNET BECAME COMMERCIAL at 82 (NAPs were modeled on CIX and "helped the commercial Internet operate as a competitive market after the NSFNET shut down.").]

In 1995, NSF decommissioned the NSFNET and the commercial Internet was born in its image. There were Tier 1 backbone networks (WANs) that provided nationwide or global service, Tier 2 networks (MANs, Metro) that provided regional service, and Tier 3 networks (LANs, Local) that provided access service. Traffic from one end-user to another end-user would travel up the topology from Tier 3 networks to be exchanged at the Tier 1 level, and then travel back down. Within networks, capacity was robust. [See David Young, Why is Netflix Buffering? Dispelling the Congestion Myth, VERIZON PUBLIC POLICY BLOG (July 10, 2014) (diagramming Verizon network with backbone, metro and local networks)] Between networks, at the points of interconnection, capacity could be constrained. An interconnection link carried the aggregated traffic of all the end-users, services, and firms to which a network provider offered service. It could become a traffic pinch-point. In order to avoid congestion, interconnection partners would have to cooperate. [See KLEINROCK, REALIZING THE INFORMATION FUTURE: THE INTERNET AND BEYOND at 183 ("Because the network is not implemented as one monolithic entity but is made of parts implemented by different operators, each of these entities must be separately concerned with achieving good loading of its links, avoiding congestion, and making a profit. The issue of sharing and congestion arises particularly at the point of connection between providers. At this point, the offered traffic represents the aggregation of many individual users, and thus cost-effective sharing of the link can be assumed. However, if congestion occurs, one must eventually push back on original sources. Options include pricing and technical controls on congestion; there may be other mechanisms for shaping consumer behavior as well.")]

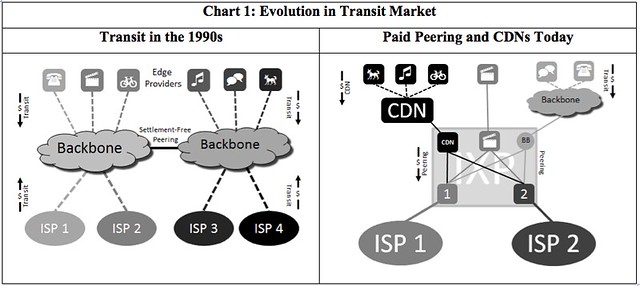

Early academic and government networks focused on research, development, and innovation, not commercial competition; they developed a simple accounting scheme for interconnection: settlement-free peering. Early commercial backbone providers adopted settlement-free peering as a means of rapidly growing their businesses. Access networks, which needed to provide full Internet service to their customers, interconnected with commercial backbone providers and paid them for transit.

The Internet interconnection market would then undergo seismic change. By 2010, large access providers had reestablished a strong position in the network ecosystem, and leveraged the network effect and interconnection to implement strategic goals. Broadband Internet access service providers were able to reverse the flow of interconnection settlement fees, going from transit customers to gatekeepers that charged paid-peering fees for access to their end-users. The interconnection market had departed from the characteristics of the early commercial Internet, where backbone networks were king and the market was hyper-competitive, and returned to traditional consolidated network market economics. [See generally WU, THE MASTER SWITCH: THE RISE AND FALL OF INFORMATION EMPIRES (discussing how communications markets go through cycles of innovation and disruption, competition, and then consolidation and market power).]
